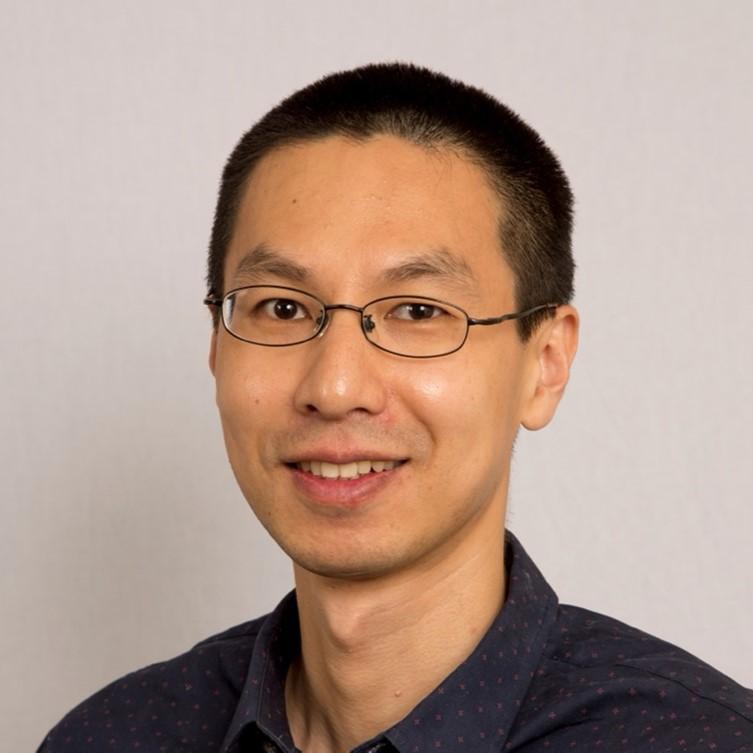

Dr Jing Xu is Head of Propositions Research at Cambridge University Press & Assessment, part of the University of Cambridge. His research interests lie in the application of cutting-edge technologies, particularly AI technologies, to language assessment and learning, and in the validity issues these applications raise. His current work focuses on 1) automarking of L2 English speaking and writing assessments and 2) validation of spoken dialogue systems. He won the 2017 Jacqueline A. Ross Dissertation Award and the 2012 International Language Testing Association (ILTA) Best Article Award, and was runner-up for the 2023 ILTA Best Article Award. He is currently Co-Chair of the ILTA Automated Language Assessment SIG, together with Dr Xiaoming Xi. Previously, he served on the editorial board of Language Assessment Quarterly and as treasurer of the Testing, Evaluation and Assessment SIG of the British Association for Applied Linguistics. He received his PhD in Applied Linguistics and Technology from Iowa State University, specialising in language assessment.

In recent years, rapid advances in machine learning have prompted increasing use of automarking in large-scale language assessment, where it has emerged as a promising response to the growing demand for efficient and reliable assessment. However, language assessment professionals have voiced scepticism and concern about the validity of automated assessment. Key issues raised include construct coverage, marking accuracy, explainability, the handling of aberrant responses, and the impact on learning. There is broad agreement that a lack of public awareness of the limitations of automarking can lead to misinterpretation of test scores and misuse of automated assessments.

Against this backdrop of opportunities and risks, this paper presents key principles underlying good practice in the use of automarking technology in language assessment and illustrates how these principles are applied in Cambridge’s communication-oriented English proficiency exams. Two automarker evaluation studies, one on a speaking test and the other on a writing test, are briefly reviewed. The innovative statistical techniques used in these studies, such as limits of agreement, weighting, and earth mover's distance, have advanced understanding of the complex nature of automarker evaluation. The paper also discusses the practice of hybrid marking, in which human marking is used to mitigate the risk of automarking inaccuracies. Finally, it calls for greater transparency in automarker research, more consistency among test developers in presenting validity and reliability evidence for automarking as part of a principled approach, and collaboration between language assessment and machine learning researchers and practitioners to improve the explainability of automarking.
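For readers unfamiliar with two of the statistics named above, the following is a minimal sketch, assuming paired human and automarker scores for the same responses on a shared numeric scale. It computes Bland-Altman limits of agreement (mean machine-minus-human difference ± 1.96 standard deviations of the differences) and the one-dimensional earth mover's distance (Wasserstein-1) between the human and machine score distributions. The function names and example scores are hypothetical, the weighting technique is not illustrated, and this is not presented as the procedure used in the Cambridge studies.

```python
import numpy as np


def limits_of_agreement(human, machine):
    """Bland-Altman 95% limits of agreement for paired scores.

    Returns (bias, lower, upper), where bias is the mean of
    machine - human and the limits are bias -/+ 1.96 * SD of the differences.
    """
    diff = np.asarray(machine, dtype=float) - np.asarray(human, dtype=float)
    bias = diff.mean()
    sd = diff.std(ddof=1)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def earth_movers_distance(u, v):
    """1-D earth mover's distance between two empirical score distributions.

    Computed as the area between the two empirical CDFs, which equals the
    Wasserstein-1 distance for one-dimensional distributions.
    """
    u = np.sort(np.asarray(u, dtype=float))
    v = np.sort(np.asarray(v, dtype=float))
    grid = np.union1d(u, v)  # sorted unique score values from both samples
    # Empirical CDFs evaluated at each grid point (right-continuous).
    cdf_u = np.searchsorted(u, grid, side="right") / len(u)
    cdf_v = np.searchsorted(v, grid, side="right") / len(v)
    # CDFs are constant between consecutive grid points, so integrate stepwise.
    return float(np.sum(np.abs(cdf_u[:-1] - cdf_v[:-1]) * np.diff(grid)))


# Hypothetical scores for six responses, marked by a human and an automarker.
human = [3, 4, 4, 5, 2, 3]
machine = [3, 4, 5, 5, 2, 4]

bias, lower, upper = limits_of_agreement(human, machine)
print(f"bias={bias:.3f}, limits of agreement=({lower:.3f}, {upper:.3f})")
print(f"EMD={earth_movers_distance(human, machine):.3f}")
```

The two statistics answer different questions: limits of agreement describe how far an individual automarker score is likely to fall from the human score for the same response, whereas earth mover's distance compares the overall shapes of the two score distributions and ignores which response received which score. For the latter, SciPy's `scipy.stats.wasserstein_distance` gives the same quantity if a library call is preferred.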